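1. In the virtual adapter settings of the source server's VIOS and the target server's VIOS ...

> the vSCSI client target must be 'Any Partition' and 'Any Partition Slot' for every adapter

> the "required" attribute must be 'No'

2. When configuring the VIOS, the MPIO disks' reserve_policy attribute must be no_reserve!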

>

lsdev -Cc disk | grep MPIO | awk '{print "chdev -l " $1 " -a reserve_policy=no_reserve "}' | sh -x

>

lsdev -Cc disk | grep MPIO | awk '{print "chdev -l " $1 " -a algorithm=round_robin "}' | sh -x

3. Each VIOS must be able to communicate with the HMC over SSH,

and the /.ssh/known_hosts file must exist on the partition that LPM will migrate

(create that file by running ssh hscroot@hmc.ipaddr)
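The chdev one-liners in step 2 pipe generated commands straight into sh. A minimal sketch of a review-first variant follows, assuming that `lsdev -Cc disk` prints the device name in the first column and that MPIO disks carry the string MPIO in their description (the same assumptions the one-liners make). The function name `gen_chdev_cmds` and the sample input are hypothetical; the function only prints commands and runs nothing.

```shell
#!/bin/sh
# Hypothetical helper: read `lsdev -Cc disk` output on stdin and print
# (not execute) the chdev commands for every MPIO disk.
gen_chdev_cmds() {
    awk '/MPIO/ {
        print "chdev -l " $1 " -a reserve_policy=no_reserve"
        print "chdev -l " $1 " -a algorithm=round_robin"
    }'
}

# Sample input standing in for real `lsdev -Cc disk` output (made up for illustration).
sample='hdisk0 Available  Virtual SCSI Disk Drive
hdisk1 Available 02-08-02 MPIO Other FC SCSI Disk Drive
hdisk2 Available 02-08-02 MPIO Other FC SCSI Disk Drive'

printf '%s\n' "$sample" | gen_chdev_cmds
```

On a VIOS, `lsdev -Cc disk | gen_chdev_cmds` would list the commands for review; appending `| sh -x` runs them as the original one-liners do.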

-

http://www.ibm.com/developerworks/aix/library/au-LPM_troubleshooting/index.html

Basic understanding and troubleshooting of LPM

Introduction

Live Partition Mobility (LPM) was introduced on POWER6. It helps avoid downtime during VIOS and firmware updates by migrating partitions to other frames. LPM also reduces the work required to create and set up a new LPAR for an application.

A majority of customers perform LPM activities on a daily basis, and many may not know the exact procedure or what is taking place. This article shows steps to overcome or fix LPM issues.

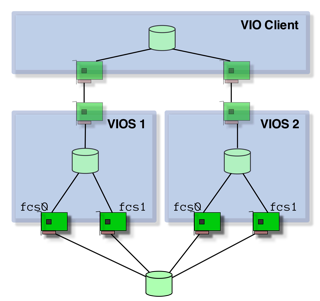

Figure 1. The AIX I/O stack


LPM key points

LPM migrates running partitions from one physical server to another while maintaining complete transactional integrity. It transfers the entire environment: processor state, memory, virtual devices, and connected users. Partitions may also migrate while powered off (inactive migration). In both cases, the operating system and application must reside on shared storage.


LPM prerequisites

You must have a minimum of two machines, a source and a destination, on POWER6 or higher with the Advanced Power Virtualization Feature enabled. The operating system and application must reside on a shared external storage (Storage Area Network). In addition to these hardware requirements, you must have:

- One hardware management console (optional) or IVM.

- Target system must have sufficient resources, like CPU and memory.

- LPAR should not have physical adapters.

Your virtual I/O servers (VIOS) must have a Shared Ethernet Adapter (SEA) configured to bridge to the same Ethernet network which the mobile partition uses. It must be capable of providing virtual access to all the disk resources which the mobile partition uses (NPIV or vSCSI). If you are using vSCSI, then the virtual target devices must be physical disks (not logical volumes).

You must be at AIX version 5.3J or later, VIOS version 1.4 or later, HMC V7R310 or later and the firmware at efw3.1 or later.


What happens at the time of LPM

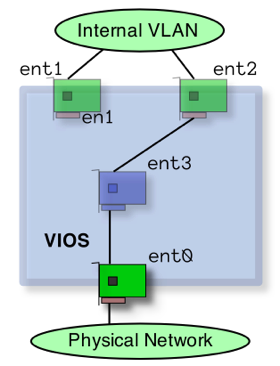

Figure 2. General LPM depiction

The following describes the general LPM depiction in Figure 2:

- Partition profile (presently active) copied from source to target FSP.

- Storage is configured on the Target.

- Mover service partitions (MSPs) are activated.

- Partition migration started.

- Majority of memory pages moved.

- All threads are quiesced.

- Activation resumed on target.

- Final memory pages moved.

- Cleanup storage and network traffic.

- Storage resources are deconfigured from the source.

- Partition profile removed from source FSP (Flexible Service Processor).


How to do LPM

Before doing LPM, we need to verify the availability of resources on both the source and destination side. If validation fails with some error, then we have to fix it to proceed further. Sometimes validation may end up with warning messages which can be ignored.

LPM using HMC GUI

Figure 3 shows you how to validate the LPAR with the HMC GUI.

From the System management -> Servers -> Trim screen, select the LPAR name: Operations -> Mobility -> Validate

Figure 3. Validating the LPAR

The validate screen, shown in Figure 4, shows that the upt0052 LPAR is validated for migration from trim to dash. If needed, specify the destination HMC.

Figure 4. Validation window

Figure 5 shows that the validation ended with a warning message. Ignore the message and select Close to continue with the migration.

Figure 5. Validation passed with general warning message

Figure 6, the Partition Migration Validation screen, shows that the information is selected to set up a migration of the partition to a different managed system. Select Migrate to verify the information.

Figure 6. Ready for migration after validation passed

When the migration completes, as shown in Figure 7, select Close.

Figure 7. Migration progressing

LPM using the HMC command line

To validate the LPM in local HMC, enter the following:

migrlpar -o v -m [source cec] -t [target cec] -p [lpar to migrate]


To validate the LPM in the Remote HMC, type:

migrlpar -o v -m [source cec] -t [target cec] -p [lpar to migrate] \
    --ip [target hmc] -u [remote user]

Note: you may prefer to use hscroot as the remote user.

Use the following migration command for LPM in the local HMC:

migrlpar -o m -m [source cec] -t [target cec] -p [lpar to migrate]


The following migration command for LPM is used with the remote HMC:

migrlpar -o m -m [source cec] -t [target cec] -p [lpar to migrate] \
    --ip [target hmc] -u [remote user]

In case of MPIO (Multipath IO) failure of a LPAR due to configuration issues between source and destination, type the following to proceed (if applicable):

migrlpar -o m -m wilma -t visa -p upt07 --redundantpgvios 0 -n upt07_noams_npiv \
    -u hscroot --vlanbridge 2 --mpio 2 -w 60 -d 5 -v \
    -i "source_msp_name=wilmav2,dest_msp_name=visav2" --ip destiny4
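The validate and migrate commands above differ only in the `-o` value and in the optional remote HMC arguments, so they can be assembled by one small helper. This is a minimal sketch, assuming it runs on an administrator workstation and the printed command is then sent to the HMC over SSH. The function name `lpm_cmd` and its argument order are hypothetical, and it covers only the flags shown in the basic commands above.

```shell
#!/bin/sh
# Hypothetical wrapper: print the migrlpar command for a validate (v) or
# migrate (m) operation. Remote HMC and remote user are optional.
# usage: lpm_cmd <v|m> <source_cec> <target_cec> <lpar> [remote_hmc [remote_user]]
lpm_cmd() {
    op=$1 src=$2 dst=$3 lpar=$4 rhmc=${5:-} ruser=${6:-hscroot}
    case $op in
        v|m) ;;
        *) echo "operation must be v or m" >&2; return 1 ;;
    esac
    cmd="migrlpar -o $op -m $src -t $dst -p $lpar"
    # Add the remote HMC arguments only when a remote HMC was given.
    if [ -n "$rhmc" ]; then
        cmd="$cmd --ip $rhmc -u $ruser"
    fi
    echo "$cmd"
}

lpm_cmd v trim dash upt0052
lpm_cmd m trim arizona upt0052 hmc-arizona hscroot
```

Validating first with `v` and migrating with `m` only after validation passes mirrors the order the article recommends.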


Troubleshooting

This section covers various error messages you might encounter and ways to correct them.

- If LPM needs to be done across two different HMCs, the appropriate authorization between the HMCs must be set up before migration. If it is not, the following mkauthkeys error displays:

hscroot@destiny4:~> migrlpar -o v -m trim -p UPT0052 --ip hmc-arizona -u hscroot -t arizona

HSCL3653 The Secure Shell (SSH) communication configuration between the source

and target Hardware Management Consoles has not been set up properly for user

hscroot. Please run the mkauthkeys command to set up the SSH communication

authentication keys.


To overcome this error, type the following:

hscroot@destiny4:~> mkauthkeys -g --ip hmc-arizona -u hscroot

Enter the password for user hscroot on the remote host hmc-arizona


- If migrating a POWER7 Active Memory Expansion (AME) partition to any of the POWER6 machines, the following error displays:

hscroot@destiny4:~> migrlpar -o v -m trim -p

UPT0052 --ip hmc-liken -u hscroot -t wilma

HSCLA318 The migration command issued to the destination HMC failed with the

following error: HSCLA335 The Hardware Management Console for the destination

managed system does not support one or more capabilities required to perform

this operation. The unsupported capability codes are as follows: AME_capability

hscroot@destiny4:~>


To correct this error either migrate to POWER7 or remove the AME and then migrate.

- If you are doing a migration of an Active Memory Sharing (AMS) partition with improper AMS setup or no free paging device on the destination side, the following error displays:

hscroot@hmc-liken:~> migrlpar -o v -m wilma -t visa --ip destiny4 -u hscroot -p

upt0060 --mpio 2

Errors:

HSCLA304 A suitable shared memory pool for the mobile partition was not found on the

destination managed system. In order to support the mobile partitions, the

destination managed system must have a shared memory pool that can accommodate the

partition's entitled and maximum memory values, as well as its redundant paging

requirements. If the destination managed system has a shared memory pool, inability

to support the mobile shared memory partition can be due to lack of sufficient memory

in the pool, or lack of a paging space device in the pool that meets the mobile

partition's redundancy and size requirements.

Details:

HSCLA297 The DLPAR Resource Manager (DRM) capability bits 0x0 for mover service

partition (MSP) visav2 indicate that partition mobility functions are not supported

on the partition.

HSCLA2FF An internal Hardware Management Console error has occurred. If this error

persists, contact your service representative.


To correct this error do either, or both, of the following:

- Because this problem relates to redundant AMS setup, the destination shared memory pool should offer the same redundancy: a pool defined with two paging VIOS partitions for high availability (HMC only). Users can select a primary and an alternate paging VIOS for each shared memory partition. For details on AMS, refer to "Configuring Active Memory Sharing from a customer's experience" (developerWorks, Aug 2009).

- A paging device of sufficient size must be present in the target AMS pool.

- If we try to migrate an LPAR from a POWER7 system to a POWER6 system, we get the following error:

hscroot@destiny4:~> migrlpar -o v -m dash -t arizona --ip hmc-arizona -u hscroot

-p upt0053

Errors:

HSCLA224 The partition cannot be migrated because it has been designated to use a

processor compatibility level that is not supported by the destination managed

system. Use the HMC to configure a level that is compatible with the destination

managed system.


The solution for the above error could be one of the following:

In cases where the mapping is correct, and you are still getting the same error, it may be due to having different types of FC adapters between source and destination MSP. For mapping methods, refer to last Note section of "Troubleshooting".

- If the destination CEC has insufficient CPU resources for the LPAR, then we get the following error:

hscpe@destiny4:~> migrlpar -o v -m dash -t wilma -p upt0053 --ip defiant2 -u

hscroothmc-arizona -u hscroot

Errors:

The partition cannot be migrated because the processing resources it requires

exceeds the available processing resources in the destination managed system's

shared processor pool. If possible, free up processing resources from that shared

processor pool and try the operation again.


And the solution is:

- We need to reduce the CPU in LPAR by DLPAR or change the profile.

- We can free up processors on the destination machine by reducing the processor units of a few of its client LPARs with a DLPAR operation (if applicable).

- If the destination CEC does not have sufficient memory, then:

hscpe@destiny4:~> migrlpar -o v -m extra5 -t dash -p upt0027

Errors:

There is not enough memory: Obtained: 2816, Required: 4608. Check that there is

enough memory available to activate the partition. If not, create a new profile or

modify the existing profile with the available resources, then activate the

partition. If the partition must be activated with these resources, deactivate any

running partition or partitions using the resource, then activate this partition.


And, the solution is either:

- We need to reduce the amount of memory in LPAR by using DLPAR operation or by changing the profile; or,

- We can increase the memory at the destination machine by reducing the memory of any other LPARs using DLPAR operation.
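To decide how much memory to free or remove, the shortfall can be read directly from the error text. The following is a minimal sketch that extracts the "Obtained" and "Required" figures and prints the difference. The function name `mem_shortfall` is hypothetical, and the result is in whatever unit the HMC reports (assumed here to be MB).

```shell
#!/bin/sh
# Hypothetical helper: read HMC error text on stdin and print the memory
# shortfall computed from the "Obtained: N, Required: M" figures.
mem_shortfall() {
    sed -n 's/.*Obtained: \([0-9][0-9]*\), Required: \([0-9][0-9]*\).*/\1 \2/p' |
    while read -r obtained required; do
        echo $((required - obtained))
    done
}

echo "There is not enough memory: Obtained: 2816, Required: 4608. Check that there is" |
    mem_shortfall
```

For the error shown above this gives 4608 - 2816 = 1792, which is the amount to remove from the migrating LPAR or to free on the destination.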

If the RMC (Resource Monitoring and Control) connection to the source VIOS, the target VIOS, and the LPAR is not established, then we may get the following error:

hscpe@destiny4:~> migrlpar -o v -m dash -t trim -p upt0053

Errors:

The operation to check partition upt0053 for migration readiness has failed.

The partition command is:

drmgr -m -c pmig -p check -d 1

The partition standard error is:

HSCLA257 The migrating partition has returned a failure response to the HMC's

request to perform a check for migration readiness. The migrating partition is

not ready for migration at this time. Try the operation again later.

Details:

HSCLA29A The RMC command issued to partition upt0053 failed.

The partition command is:

drmgr -m -c pmig -p check -d 1

The RMC return code is:

1141

The OS command return code is:

0

The OS standard out is:

Network interruption occurs while RMC is waiting for the execution of the command

on the partition to finish.

Either the partition has crashed, the operation has caused CPU starvation, or

IBM.DRM has crashed in the middle of the operation.

The operation could have completed successfully. (40007) (null)

The OS standard err is:


To fix this problem, refer to "Dynamic LPAR tips and checklists for RMC authentication and authorization" (developerWorks, Feb 2005) for more information.

- If the partition you are trying to migrate uses MPIO with a dual VIOS setup, and the target has dual VIOS that is not set up properly for MPIO, then we may get the error listed below:

hscroot@hmc-liken:~> migrlpar -o v -m wilma -t visa --ip destiny4 -u hscroot -p

upt0060

Errors:

HSCLA340 The HMC may not be able to replicate the source multipath I/O

configuration for the migrating partition's virtual I/O adapters on the

destination. This means one or both of the following: (1) Client adapters

that are assigned to different source VIOS hosts may be assigned to a single

VIOS host on the destination; (2) Client adapters that are assigned to a single

source VIOS host may be assigned to different VIOS hosts on the destination.

You can review the complete list of HMC-chosen mappings by issuing the command

to list the virtual I/O mappings for the migrating partition.

HSCLA304 A suitable shared memory pool for the mobile partition was not found

on the destination managed system. In order to support the mobile partition,

the destination managed system must have a shared memory pool that can

accommodate the partition's entitled and maximum memory values, as well as its

redundant paging requirements. If the destination managed system has a shared

memory pool, inability to support the mobile shared memory partition can be due

to lack of sufficient memory in the pool, or lack of a paging space device in

the pool that meets the mobile partition's redundancy and size requirements.

Details:

HSCLA297 The DLPAR Resource Manager (DRM) capability bits 0x0 for mover service

partition (MSP) visav2 indicate that partition mobility functions are not

supported on the partition.

HSCLA2FF An internal Hardware Management Console error has occurred. If this

error persists, contact your service representative.

Warning:

HSCLA246 The HMC cannot communicate migration commands to the partition visav2.

Either the network connection is not available or the partition does not have a

level of software that is capable of supporting partition migration. Verify the

correct network and migration setup of the partition, and try the operation

again.


The solution is:

- Check the correctness of dual VIOS, availability of adapters, mappings in SAN and switch.

If the above solution is not feasible to implement, then:

- Use --mpio 2 with the migrlpar command. With this option, we may lose the dual VIOS setup for MPIO disks. PowerVM generally does not recommend this solution.

- If the source VIOS has a non-recommended NPIV configuration, we will get the following error:

hscroot@hmc-liken:~> migrlpar -o v -m wilma -t visa --ip destiny4 -u hscroot -p

upt0060

Errors:

HSCLA340 The HMC may not be able to replicate the source multipath I/O

configuration for the migrating partition's virtual I/O adapters on the

destination. This means one or both of the following: (1) Client adapters

that are assigned to different source VIOS hosts may be assigned to a single

VIOS host on the destination; (2) Client adapters that are assigned to a single

source VIOS host may be assigned to different VIOS hosts on the destination.

You can review the complete list of HMC-chosen mappings by issuing the command

to list the virtual I/O mappings for the migrating partition.

HSCLA304 A suitable shared memory pool for the mobile partition was not found

on the destination managed system. In order to support the mobile partition,

the destination managed system must have a shared memory pool that can

accommodate the partition's entitled and maximum memory values, as well as its

redundant paging requirements. If the destination managed system has a shared

memory pool, inability to support the mobile shared memory partition can be due

to lack of sufficient memory in the pool, or lack of a paging space device in

the pool that meets the mobile partition's redundancy and size requirements.

Details:

HSCLA297 The DLPAR Resource Manager (DRM) capability bits 0x0 for mover service

partition (MSP) visav2 indicate that partition mobility functions are not

supported on the partition.

HSCLA2FF An internal Hardware Management Console error has occurred. If this

error persists, contact your service representative.

Warning:

HSCLA246 The HMC cannot communicate migration commands to the partition visav2.

Either the network connection is not available or the partition does not have a

level of software that is capable of supporting partition migration. Verify the

correct network and migration setup of the partition, and try the operation

again.


When we verify in VIOS (output in the format of lsmap -all -npiv):

Name Physloc ClntID ClntName ClntOS

----------- --------------------------------- ------- ------------ ------

vfchost3 U9117.MMB.100302P-V1-C14 5 upt0052 AIX

Status:LOGGED_IN

FC name:fcs0 FC loc code:U78C0.001.DBJ0563-P2-C1-T1

Ports logged in:35

Flags:a<LOGGED_IN,STRIP_MERGE>

VFC client name:fcs1 VFC client DRC:U8233.E8B.100244P-V5-C4-T1


Name Physloc ClntID ClntName ClntOS

----------- --------------------------------- ------- ------------ ------

vfchost8 U9117.MMB.100302P-V1-C13

Status:LOGGED_IN

FC name:fcs0 FC loc code:U78C0.001.DBJ0563-P2-C1-T1

Ports logged in:0

Flags:4<NOT_LOGGED>

VFC client name: VFC client DRC


Here the problem is that vfchost3 and vfchost8 are both mapped to the same host (upt0058) and to the same physical FC port (fcs0). This is not the recommended setup. To fix this, use either of these methods:

- We need to map one of the vfchost to another FC (fcs1) on the server which is connected to the switch.

- We can remove one of the vfchost through DLPAR.
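The condition described above, two vfchost adapters for one client on the same physical FC port, can be searched for in saved mapping output. The following is a minimal sketch, assuming the input is in the format of the listings shown earlier (a line starting with the vfchost name whose fourth field is the client name, followed by a line starting with "FC name:"). The function name `find_shared_fc` and the trimmed sample input are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read NPIV mapping output on stdin and report every
# client that has more than one vfchost on the same physical FC port.
find_shared_fc() {
    awk '
        /^vfchost/ {
            host = $1
            client = ""
            if (NF >= 4) client = $4      # client name is absent when not logged in
        }
        /^FC name:/ {
            fc = $2
            sub(/^name:/, "", fc)         # "name:fcs0" -> "fcs0"
            if (client != "" && fc != "") {
                key = client " " fc
                if (key in hosts) hosts[key] = hosts[key] "," host
                else hosts[key] = host
                count[key]++
            }
        }
        END {
            for (key in count)
                if (count[key] > 1) print key " " hosts[key]
        }
    '
}

# Illustrative input (trimmed and made up, modeled on the listings above).
find_shared_fc <<'EOF'
vfchost3 U9117.MMB.100302P-V1-C14 5 upt0058 AIX
Status:LOGGED_IN
FC name:fcs0 FC loc code:U78C0.001.DBJ0563-P2-C1-T1
vfchost8 U9117.MMB.100302P-V1-C13 5 upt0058 AIX
Status:LOGGED_IN
FC name:fcs0 FC loc code:U78C0.001.DBJ0563-P2-C1-T1
EOF
```

Each output line names the client, the FC port, and the vfchost adapters that share it; an empty result means no client has two adapters on one port.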

- The following error indicates an incompatibility between the source and target FC adapters. The incompatibility can stem from a number of FC adapter characteristics. (Many different kinds of FC incompatibility or mapping problems produce a "return code of 69".)

hscroot@guandu5:~> migrlpar -o v -m flrx -t dash --ip destiny4 -u hscroot -p

upt0064

HSCLA319 The migrating partition's virtual fibre channel client adapter 4

cannot be hosted by the existing Virtual I/O Server (VIOS) partitions on

the destination managed system. To migrate the partition, set up the

necessary VIOS host on the destination managed system, then try the

operation again.

HSCLA319 The migrating partition's virtual fibre channel client adapter 3

cannot be hosted by the existing Virtual I/O Server (VIOS) partitions on

the destination managed system. To migrate the partition, set up the

necessary VIOS host on the destination managed system, then try the

operation again.

Details:

HSCLA356 The RMC command issued to partition dashv1 failed. This means that

destination VIOS partition dashv1 cannot host the virtual adapter 4 on the

migrating partition.

HSCLA29A The RMC command issued to partition dashv1 failed.

The partition command is:

migmgr -f find_devices -t vscsi -C 0x3 -d 1

The RMC return code is:

0

The OS command return code is:

69

The OS standard out is:

Running method '/usr/lib/methods/mig_vscsi'

69

The OS standard err is:

The search was performed for the following device description:

<vfc-server>

<generalInfo>

<version>2.0 </version>

<maxTransfer>1048576</maxTransfer>

<minVIOSpatch>0</minVIOSpatch>

<minVIOScompatability>1</minVIOScompatability>

<effectiveVIOScompatability>-1</effectiveVIOScompatability>

<numPaths>1</numPaths>

<numPhysAdapPaths>1</numPhysAdapPaths>

<numWWPN>34</numWWPN>

<adpInterF>2</adpInterF>

<adpCap>5</adpCap>

<linkSpeed>400</linkSpeed>

<numIniat>6</numIniat>

<activeWWPN>0xc0507601a6730036</activeWWPN>

<inActiveWWPN>0xc0507601a6730037</inActiveWWPN>

<nodeName>0xc0507601a6730036</nodeName>

<streamID>0x0</streamID>

</generalInfo>

<ras>

<partitionID>1</partitionID>

</ras>

<wwpn_list>

<wwpn>0x201600a0b84771ca</wwpn>

<wwpn>0x201700a0b84771ca</wwpn>

<wwpn>0x202400a0b824588d</wwpn>

<wwpn>0x203400a0b824588d</wwpn>

<wwpn>0x202500a0b824588d</wwpn>

<wwpn>0x203500a0b824588d</wwpn>

<wwpn>0x5005076303048053</wwpn>

<wwpn>0x5005076303098053</wwpn>

<wwpn>0x5005076303198053</wwpn>

<wwpn>0x500507630319c053</wwpn>

<wwpn>0x500507630600872d</wwpn>

<wwpn>0x50050763060b872d</wwpn>

<wwpn>0x500507630610872d</wwpn>

<wwpn>0x50050763061b872d</wwpn>

<wwpn>0x500a098587e934b3</wwpn>

<wwpn>0x500a098887e934b3</wwpn>

<wwpn>0x20460080e517b812</wwpn>

<wwpn>0x20470080e517b812</wwpn>

<wwpn>0x201400a0b8476a74</wwpn>

<wwpn>0x202400a0b8476a74</wwpn>

<wwpn>0x201500a0b8476a74</wwpn>

<wwpn>0x202500a0b8476a74</wwpn>

<wwpn>0x5005076304108e9f</wwpn>

<wwpn>0x500507630410ce9f</wwpn>

<wwpn>0x50050763043b8e9f</wwpn>

<wwpn>0x50050763043bce9f</wwpn>

<wwpn>0x201e00a0b8119c78</wwpn>

<wwpn>0x201f00a0b8119c78</wwpn>

<wwpn>0x5001738003d30151</wwpn>

<wwpn>0x5001738003d30181</wwpn>

<wwpn>0x5005076801102be5</wwpn>

<wwpn>0x5005076801102dab</wwpn>

<wwpn>0x5005076801402be5</wwpn>

<wwpn>0x5005076801402dab</wwpn>

</wwpn_list>

</vfc-server>

The solution can be any one of the following (or it may fail due to other mismatching characteristic of target FC adapters):

- Make sure the characteristic of FC adapter is the same between source and target.

- Make sure the source and target adapters reach the same set of targets (check the zoning).

- Make sure that the FC adapter is connected properly.

Sometimes the configuration log at the time of validation or migration is required to debug the errors. To get the log, run the following command from source MSP:

NPIV mapping steps for LPM:

- Zone both NPIV WWN (World Wide Name) and SAN WWN together.

- Mask the LUNs and the NPIV client WWN together.

- Make sure the source and target VIOS have a path to the SAN subsystem.

vSCSI mapping steps for LPM:

- Zone both source and target VIOS WWN and SAN WWN together.

- Make sure LUN is masked with source and target VIOS together from SAN subsystem.
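The error codes covered in this section can be collected into a small triage helper. This is a minimal sketch that pulls the distinct HSCL codes out of saved migrlpar output and prints a one-line hint summarizing the fix this article suggests for each. The function name `lpm_hints` is hypothetical, the code pattern (HSCL followed by four hexadecimal characters) is inferred from the messages shown here, and `grep -o` is assumed to be available on the machine where the saved output is analyzed.

```shell
#!/bin/sh
# Hypothetical helper: read saved migrlpar output on stdin, list each distinct
# HSCL message code once, and print a hint based on this article.
lpm_hints() {
    grep -oE 'HSCL[0-9A-F]{4}' | sort -u |
    while read -r code; do
        case $code in
            HSCL3653) hint="run mkauthkeys to set up SSH keys between the HMCs" ;;
            HSCLA335) hint="destination lacks a required capability (for example AME)" ;;
            HSCLA304) hint="check the shared memory pool and paging devices on the destination" ;;
            HSCLA224) hint="set a processor compatibility level supported by the destination" ;;
            HSCLA257) hint="check the RMC connection to the migrating partition" ;;
            HSCLA340) hint="check the dual VIOS and MPIO setup on the destination" ;;
            HSCLA319) hint="check FC adapter compatibility, zoning, and cabling" ;;
            *)        hint="no hint in this sketch" ;;
        esac
        echo "$code: $hint"
    done
}

printf '%s\n' \
    'HSCLA224 The partition cannot be migrated because it has been designated to use a' \
    'processor compatibility level that is not supported by the destination managed' |
    lpm_hints
```

Codes that appear only as secondary details, such as HSCLA2FF, fall through to the default branch, so they are still listed without a misleading hint.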


LPM enhancement in POWER7

As noted in the LPM prerequisites section, an LPAR to be migrated should not have any physical adapters. On POWER7, however, an LPAR that you migrate to another POWER7 system can have a Host Ethernet Adapter (HEA, also called Integrated Virtual Ethernet) attached, provided that an EtherChannel is created over the HEA and a newly created virtual adapter in aggregation mode. After migration, only the virtual adapter is configured on the target, with the IP address still on the EtherChannel; the HEA is not migrated. Also, make sure the VLANs used by the virtual adapters in the EtherChannel are added to both the source and target VIOS.

Before LPM:

# lsdev -Cc adapter

ent0 Available Logical Host Ethernet Port (lp-hea)

ent1 Available Logical Host Ethernet Port (lp-hea)

ent2 Available Logical Host Ethernet Port (lp-hea)

ent3 Available Logical Host Ethernet Port (lp-hea)

ent4 Available Virtual I/O Ethernet Port (l-lan)

ent5 Available Virtual I/O Ethernet Port (l-lan)

ent6 Available Virtual I/O Ethernet Port (l-lan)

ent7 Available Virtual I/O Ethernet Port (l-lan)

ent8 Available EtherChannel / 802.3ad Link Aggregation

ent9 Available EtherChannel / 802.3ad Link Aggregation

ent10 Available EtherChannel / 802.3ad Link Aggregation

ent11 Available EtherChannel / 802.3ad Link Aggregation

fcs0 Available C3-T1 Virtual Fibre Channel Adapter

fcs1 Available C3-T1 Virtual Fibre Channel Adapter

lhea0 Available Logical Host Ethernet Adapter (l-hea)

lhea1 Available Logical Host Ethernet Adapter (l-hea)

vsa0 Available LPAR Virtual Serial Adapter

[root@upt0017] /


In this case LPM also works a bit differently from the earlier method: it has to be started from the LPAR itself using smitty (also called client-side LPM), not from the HMC. The LPAR must have the following SSH filesets installed to do LPM through smitty:

openssh.base.client

openssh.base.server

openssh.license

openssh.man.en_US

openssl.base

openssl.license

openssl.man.en_US


Use smitty to migrate a POWER7 LPAR with HEA. Start smit and select Applications as the first step:

# smit

System Management

Move cursor to desired item and press Enter

Software Installation and Maintenance

Software License Management

Manage Editions

Devices

System Storage Management (Physical & Logical Storage)

Security & User

Communication Applications and Services

Workload Partition Administration

Print Spooling

Advanced Accounting

Problem Determination

Performance & Resource Scheduling

System Environments

Processes & Subsystems

Applications

Installation Assistant

Electronic Service Agent

Cluster Systems Management

Using SMIT (information only)


After selecting "Applications", then select "Live Partition Mobility with Host Ethernet Adapter (HEA)" to proceed.

Move cursor to desired item and press Enter

Live Partition Mobility with Host Ethernet Adapter (HEA)


Next enter the required fields such as source and destination HMC and HMC users, source and destination managed system names, LPAR name.

Live Partition Mobility with Host Ethernet Adapter (HEA)

Type or select values in the entry fields.

Press Enter AFTER making all desired changes

[Entry Fields]

* Source HMC Hostname or IP address [destinty2]

* Source HMC Username [hscroot]

* Migration between two HMCs no

Remote HMC hostname or IP address [ ]

Remote HMC Username [ ]

* Source System [link]

* Destination System [king]

* Migrating Partition Name [upt0017]

* Migration validation only yes


After a successful migration, the smitty command output shows OK.

Command Status

Command: OK stdout: yes Stderr: no

Before command completion, additional instruction may appear below.

Setting up SSH credentials with destinty2

If prompted for a password, please enter password for user hscroot on HMC destinty2

Verifying EtherChannel configuration ...

Modifying EtherChannel configuration for mobility ...

Starting partition mobility process. This process is complete.

DO NOT halt or kill the migration process. Unexpected results may occur if the migration

process is halted or killed.

Partition mobility process is complete. The partition has migrated.


After a successful LPM, all HEAs are in the Defined state, but the EtherChannel between the HEA and the virtual adapter still exists and the IP address is still configured on the EtherChannel.

[root@upt0017] /

# lsdev -Cc adapter

ent0 Defined Logical Host Ethernet Port (lp-hea)

ent1 Defined Logical Host Ethernet Port (lp-hea)

ent2 Defined Logical Host Ethernet Port (lp-hea)

ent3 Defined Logical Host Ethernet Port (lp-hea)

ent4 Available Virtual I/O Ethernet Adapter (l-lan)

ent5 Available Virtual I/O Ethernet Adapter (l-lan)

ent6 Available Virtual I/O Ethernet Adapter (l-lan)

ent7 Available Virtual I/O Ethernet Adapter (l-lan)

ent8 Available EtherChannel / IEEE 802.3ad Link Aggregation

ent9 Available EtherChannel / IEEE 802.3ad Link Aggregation

ent10 Available EtherChannel / IEEE 802.3ad Link Aggregation

ent11 Available EtherChannel / IEEE 802.3ad Link Aggregation

fcs0 Available C3-T1 Virtual Fibre Channel Client Adapter

fcs1 Available C4-T1 Virtual Fibre Channel Client Adapter

lhea0 Defined Logical Host Ethernet Adapter (l-hea)

lhea1 Defined Logical Host Ethernet Adapter (l-hea)

vsa0 Available LPAR Virtual Serial Adapter

[root@upt0017] /

# netstat -i

Name Mtu Network Address Ipkts Ierrs Opkts Oerrs Coll

en8 1500 link#2 0.21.5E.72.AE.40 9302210 0 819878 0 0

en8 1500 10.33 upt0017.upt.aust 9302210 0 819978 0 0

en9 1500 link#3 0.21.5e.72.ae.52 19667 0 314 2 0

en9 1500 192.168.17 upt0017e0.upt.au 19667 0 314 2 0

en10 1500 link#4 0.21.5e.72.ae.61 76881 0 1496 0 0

en10 1500 192.168.18 upt0017g0.upt.au 76881 0 1496 0 0

en11 1500 link#5 0.21.5e.72.ae.73 1665 0 2200 2 0

en11 1500 192.168.19 upt0017d0.upt.au 1665 0 2200 2 0

lo0 16896 link#1 1660060 0 160060 0 0

lo0 16896 loopback localhost '' 1660060 0 160060 0 0

lo0 16896 ::1%1 1660060 0 160060 0 0

[root@upt0017] /

#

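The post-migration state shown above (HEA ports and adapters Defined, EtherChannels Available) can be checked mechanically. The following is a minimal sketch, assuming the `lsdev -Cc adapter` output format shown in this section, with the device name in the first column and the state in the second. The function name `check_post_lpm` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `lsdev -Cc adapter` output on stdin and flag any
# HEA device that is not Defined or any EtherChannel that is not Available.
check_post_lpm() {
    awk '
        /Host Ethernet/ && $2 != "Defined"   { print "unexpected: " $1 " is " $2; bad = 1 }
        /EtherChannel/  && $2 != "Available" { print "unexpected: " $1 " is " $2; bad = 1 }
        END {
            if (!bad) print "adapter states look as expected"
            exit (bad ? 1 : 0)
        }
    '
}

# Sample lines taken from the listing above.
check_post_lpm <<'EOF'
ent0 Defined Logical Host Ethernet Port (lp-hea)
ent4 Available Virtual I/O Ethernet Adapter (l-lan)
ent8 Available EtherChannel / IEEE 802.3ad Link Aggregation
lhea0 Defined Logical Host Ethernet Adapter (l-hea)
EOF
```

On the migrated LPAR, `lsdev -Cc adapter | check_post_lpm` would return a non-zero exit status if any device is in an unexpected state.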

Other enhancements for POWER7

Additional enhancements are:

- User defined Virtual Target Device names are preserved across LPM (vSCSI).

- Support for shared persistent (SCSI-3) reserves on LUNs of a migrating partition (vSCSI).

- Support for migration of a client across non-symmetric VIOS configurations. The migrations involve a loss of redundancy. It requires an HMC level V7r3.5.0 and the GUI "override errors" option or command line --force flag. It also allows for moving a client partition to a CEC whose VIOS configuration does not provide the same level of redundancy found on the source.

- The CLI interface to configure IPSEC tunneling for the data connection between MSPs.

- Support to allow the user to select the MSP IP addresses to use during a migration.


Limitations

- LPM cannot be performed on a stand-alone LPAR; it should be a VIOS client.

- It must have virtual adapters for both network and storage.

- It requires PowerVM Enterprise Edition.

- The VIOS cannot be migrated.

- When migrating between systems, only the active profile is updated for the partition and VIOS.

- A partition that is in a crashed or failed state is not capable of being migrated.

- A server that is running on battery power is not allowed to be the destination of a migration. A server that is running on battery power may be the source of a migrating partition.

- For a migration to be performed, the destination server must have resources (for example, processors and memory) available that are equivalent to the current configuration of the migrating partition. If a reduction or increase of resources is required then a DLPAR operation needs to be performed separate from migration.

- LPM is not a replacement for a PowerHA solution or a disaster recovery solution.

- The partition data is not encrypted when transferred between MSPs.


Conclusion

This article gives administrators, testers, and developers information so that they can configure and troubleshoot LPM. A step-by-step command line and GUI configuration procedure is explained for LPM activity. This article also explains prerequisites and limitations while performing LPM activity.


About the authors

Raghavendra Prasannakumar works as a systems software engineer on the IBM AIX UPT release team in Bangalore, India and has worked there for 3 years. He has worked on AIX testing on Power series and on AIX virtualization features like VIOS, VIOSNextGen, AMS, NPIV, LPM, and AIX-TCP features testing. He has also worked on customer configuration setup using AIX and Power series. You can reach him at kpraghavendra@in.ibm.com.

Shashidhar Soppin works as a system software test specialist on the IBM AIX UPT release team in Bangalore, India. Shashidhar has over nine years of experience working on development tasks in RTOS, Windows and UNIX platforms and has been involved in AIX testing for 5 years. He works on testing various software vendors' applications and databases for pSeries servers running AIX. He specializes in Veritas 5.0 VxVM and VxFS configuration and installation, ITM 6.x installation and configuration and Workload Development tasks on AIX. He is an IBM Certified Advanced Technical Expert (CATE)-IBM System p5 2006. He holds patents and has been previously published. You can reach him at shsoppin@in.ibm.com.

Shivendra Ashish works as software engineer on the IBM AIX UPT release team in Bangalore, India. He has worked on AIX, PowerHA, PowerVM components on pSeries for the last 2 years at IBM India Software Labs. He also worked on various customer configurations and engagements using PowerHA, PowerVM, and AIX on pSeries. You can reach him at shiv.ashish@in.ibm.co

-

http://www.ibm.com/developerworks/wikis/display/virtualization/Live+Partition+Mobility

Live Partition Mobility (LPM) Nutshell

Neil Koropoff, 06/11/2012

Access pdf version of the Live Partition Mobility (LPM) Nutshell

The information below is from multiple sources including:

This document is not intended to replace the LPM Redbook or other IBM documentation. It is only to summarize the requirements and point out fixes that have been released. Changes are in bold.

Major requirements for active Live Partition Mobility are:

Software Version Requirements:

Base LPM:

Hardware Management Console (HMC) minimum requirements

- Version 7 Release 3.2.0 or later with required fixes MH01062 for both active and inactive partition migration. If you do not have this level, upgrade the HMC to the correct level.

- Model 7310-CR2 or later, or the 7310-C03

- Version 7 Release 710 when managing at least one Power7 server.

Systems Director Management Console requirements

- The requirements are the base SDMC level plus the minimum requirements of the machine types that are attached to the SDMC.

Integrated Virtualization Manager (IVM) minimum requirements

- IVM is provided by the Virtual I/O Server at release level 1.5.1.1 or higher.

PowerVM minimum requirements:

- Both source and destination systems must have the PowerVM Enterprise Edition license code installed.

- Both source and destination systems must be at firmware level 01Ex320 or later, where x is an S for BladeCenter®, an L for Entry servers (such as the Power 520, Power 550, and Power 560), an M for Midrange servers (such as the Power 570) or an H for Enterprise servers (such as the Power 595).
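The naming scheme above can be checked mechanically. The following is a minimal sketch, assuming the firmware level is passed in as a string such as 01EM320 or EM320_031; the function names fw_platform and fw_meets_lpm_minimum are hypothetical helpers written for this note, not IBM tools, and only the POWER6 platform letters listed above are recognized.

```shell
# Hypothetical helper: map the platform letter of a POWER6 firmware level
# (for example "01EM320" or "EM320_031") to the server class named above.
fw_platform() {
    level=${1#01}              # drop the optional leading "01"
    case $level in
        ES*) echo "BladeCenter" ;;
        EL*) echo "Entry" ;;
        EM*) echo "Midrange" ;;
        EH*) echo "Enterprise" ;;
        *)   echo "unknown" ;;
    esac
}

# Hypothetical helper: print "ok" when the release number is 320 or higher.
fw_meets_lpm_minimum() {
    level=${1#01}
    release=${level#??}        # strip the two-letter prefix, e.g. "EM"
    release=${release%%_*}     # strip a "_NNN" service pack suffix if present
    case $release in
        ''|*[!0-9]*) echo "unknown"; return 2 ;;
    esac
    if [ "$release" -ge 320 ]; then
        echo "ok"
    else
        echo "too old"
        return 1
    fi
}
```

For example, fw_platform 01EM320 prints Midrange, and fw_meets_lpm_minimum EM310_069 prints too old. This checks only the minimum level; source and destination compatibility still has to be confirmed against the firmware matrix.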

Although there is a minimum required firmware level, each system may have a different level of firmware. The level of source system firmware must be compatible with the destination firmware. The latest firmware migration matrix can be found here:

http://publib.boulder.ibm.com/infocenter/powersys/v3r1m5/index.jsp?topic=/p7hc3/p7hc3firmwaresupportmatrix.htm

Note: When a partition mobility operation is performed from a Power6 system running at xx340_122 (or earlier) firmware level to a Power7 system running at a firmware level xx720_yyy, a BA000100 error log is generated on the target system, indicating that the partition is running with the partition firmware of the source system. The partition will continue to run normally on the target system, however future firmware updates cannot be concurrently activated on that partition. IBM recommends that the partition be rebooted in a maintenance window so the firmware updates can be applied to the partition on the target system.

If the partition has been booted on a server running firmware level xx340_132 (or later), it is not subject to the generation of an error log after a mobility operation.

Source and destination Virtual I/O Server minimum requirements

- At least one Virtual I/O Server at release level 1.5.1.1 or higher has to be installed on both the source and destination systems.

- Virtual I/O Server at release level 2.1.2.11 with Fix Pack 22.1 and Service Pack 1, or later, for Power7 servers.

Client LPARs using Shared Storage Pool virtual devices are now supported for Live Partition Mobility (LPM) with the PowerVM V2.2 refresh that shipped on 12/16/2011.

Operating system minimum requirements

The operating system running in the mobile partition has to be AIX or Linux. A Virtual I/O Server logical partition or a logical partition running the IBM i operating system cannot be migrated. The operating system must be at one of the following levels:

Note: The operating system level of the LPAR on the source hardware must already meet the minimum operating system requirements of the destination hardware before a migration.

- AIX 5L™ Version 5.3 Technology Level 7 or later (the required level is 5300-07-01) or AIX Version 5.3 Technology Level 09 and Service Pack 7 or later for Power7 servers.

- AIX Version 6.1 or later (the required level is 6100-00-01) or AIX Version 6.1 Technology Level 02 and Service Pack 8 or later.

- Red Hat Enterprise Linux Version 5 (RHEL5) Update 1 or later (with the required kernel security update)

- SUSE Linux Enterprise Server 10 (SLES 10) Service Pack 1 or later (with the required kernel security update)
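The AIX minimums above can be compared against the level string that oslevel -s reports on the mobile partition. The following is a minimal sketch, assuming the level has the form BASE-TL-SP with an optional build suffix (for example 5300-07-01-0748); aix_meets_lpm_minimum is a hypothetical helper, and it covers only the 5300 and 6100 bases named in the list.

```shell
# Hypothetical helper: check an `oslevel -s` style string against the AIX
# minimums listed above.
# Usage: aix_meets_lpm_minimum LEVEL [power6|power7]
aix_meets_lpm_minimum() {
    level=${1:-}
    target=${2:-power6}

    # Split "BASE-TL-SP[-BUILD]" on hyphens.
    old_ifs=$IFS
    IFS=-
    set -- $level
    IFS=$old_ifs
    base=${1:-0}
    tl=${2:-0}
    sp=${3:-0}

    # Drop one leading zero so "08" and "09" compare as plain decimals.
    tl=${tl#0}; tl=${tl:-0}
    sp=${sp#0}; sp=${sp:-0}

    # Minimum technology level and service pack per base and target.
    case "$base:$target" in
        5300:power7) min_tl=9; min_sp=7 ;;
        5300:*)      min_tl=7; min_sp=1 ;;
        6100:power7) min_tl=2; min_sp=8 ;;
        6100:*)      min_tl=0; min_sp=1 ;;
        *)           echo "unknown"; return 2 ;;
    esac

    if [ "$tl" -gt "$min_tl" ] ||
       { [ "$tl" -eq "$min_tl" ] && [ "$sp" -ge "$min_sp" ]; }; then
        echo "ok"
    else
        echo "too old"
        return 1
    fi
}
```

On the mobile partition this could be called as aix_meets_lpm_minimum "$(oslevel -s)" power7 before a move to a Power7 server.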

Active Memory Sharing (AMS) software minimum requirements:

Note: AMS is not required to perform LPM. It is listed here because both are features of PowerVM Enterprise Edition, and AMS has more restrictive software requirements that apply if you plan to use both.

- PowerVM Enterprise activation

- Firmware level 01Ex340_075

- HMC version 7.3.4 service pack 2 (V7R3.4.0M2) for HMC managed systems

- Virtual I/O Server Version 2.1.0.1-FP21 for both HMC and IVM managed systems

- AIX 6.1 TL 3

- Novell SuSE SLES 11

See above requirements for Power7 servers.

Virtual Fibre Channel (NPIV) software minimum requirements:

- HMC Version 7 Release 3.4, or later

- Virtual I/O Server Version 2.1 with Fix Pack 20.1, or later (V2.1.3 required for NPIV on FCoCEE)

- AIX 5.3 TL9, or later

- AIX 6.1 TL2 SP2, or later

Configuration Requirements:

Base LPM:

Source and destination system requirements for HMC managed systems:

The source and destination systems must be IBM Power Systems POWER6 or POWER7 technology-based models such as:

- 8203-E4A (IBM Power System 520 Express)

- 8231-E2B, E1C (IBM Power System 710 Express)

- 8202-E4B, E4C (IBM Power System 720 Express)

- 8231-E2B, E2C (IBM Power System 730 Express)

- 8205-E6B, E6C (IBM Power System 740 Express)

- 8204-E8A (IBM Power System 550 Express)

- 8234-EMA (IBM Power System 560 Express)

- 9117-MMA (IBM Power System 570)

- 9119-FHA (IBM Power System 595)

- 9125-F2A (IBM Power System 575)

- 8233-E8B (IBM Power System 750 Express)

- 9117-MMB, MMC (IBM Power System 770)

- 9179-MHB, MHC (IBM Power System 780)

- 9119-FHB (IBM Power System 795)

Source and destination system requirements for IVM managed systems:

- 8203-E4A (IBM Power System 520 Express)

- 8204-E8A (IBM Power System 550 Express)

- 8234-EMA (IBM Power System 560 Express)

- BladeCenter JS12

- BladeCenter JS22

- BladeCenter JS23

- BladeCenter JS43

- BladeCenter PS700

- BladeCenter PS701

- BladeCenter PS702

- BladeCenter PS703

- BladeCenter PS704

- 8233-E8B (IBM Power System 750 Express)

- 8231-E2B, E1C (IBM Power System 710 Express)

- 8202-E4B, E4C (IBM Power System 720 Express)

- 8231-E2B, E2C (IBM Power System 730 Express)

- 8205-E6B, E6C (IBM Power System 740 Express)

Source and destination system requirements for Flexible Systems Manager (FSM) managed systems:

- 7895-22X (IBM Flex System p260 Compute Node)

- 7895-42X (IBM Flex System p460 Compute Node)

A system is capable of being either the source or destination of a migration if it contains the necessary processor hardware to support it.

Source and destination Virtual I/O Server minimum requirements

- A new partition attribute, called the mover service partition, has been defined that enables you to indicate whether a mover-capable Virtual I/O Server partition should be considered during the selection process of the MSP for a migration. By default, all Virtual I/O Server partitions have this new partition attribute set to FALSE.

- In addition to having the mover service partition attribute set to TRUE, the source and destination mover service partitions must be able to communicate with each other over the network. On both the source and destination servers, the Virtual Asynchronous Services Interface (VASI) device provides communication between the mover service partition and the POWER Hypervisor.
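Because the attribute defaults to FALSE, it is worth listing which Virtual I/O Servers still have it disabled. The following is a minimal sketch, assuming the HMC reports the attribute as a field named msp with values 0 and 1, and that the input is comma-separated name,lpar_env,msp lines; vios_without_msp is a hypothetical helper, and the field names should be confirmed on your HMC level.

```shell
# Hypothetical helper: read "name,lpar_env,msp" lines on stdin and print the
# names of Virtual I/O Server partitions whose msp field is 0.
vios_without_msp() {
    awk -F, '$2 == "vioserver" && $3 == "0" { print $1 }'
}

# Assumed usage from a workstation with SSH access to the HMC
# (the managed system name is a placeholder):
#   ssh hscroot@hmc.ipaddr \
#       "lssyscfg -r lpar -m <managed system> -F name,lpar_env,msp" |
#       vios_without_msp
```

Any name printed would not be considered as a mover service partition until the attribute is enabled in the partition properties.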

Storage requirements

For a list of supported disks and optical devices, see the Virtual I/O Server

data sheet for VIOS:

http://www14.software.ibm.com/webapp/set2/sas/f/vios/documentation/datasheet.html

Make sure the reserve_policy attribute of each SAN disk is set to no_reserve.

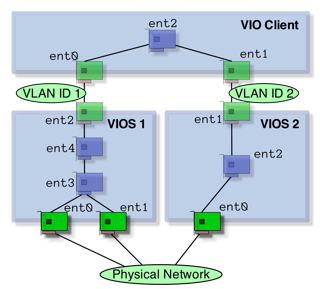

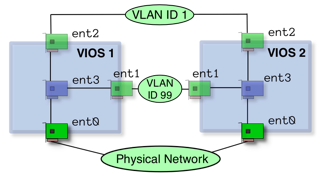
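The reservation setting can be audited per disk before a migration. The following is a minimal sketch, assuming MPIO disks that expose the reserve_policy attribute and lsattr output whose first two columns are the attribute name and its value; reserve_policy_value and suggest_fix are hypothetical helpers, and suggest_fix only prints the chdev command rather than running it.

```shell
# Hypothetical helper: read `lsattr -El <disk> -a reserve_policy` output on
# stdin and print the current value (second column).
reserve_policy_value() {
    awk '$1 == "reserve_policy" { print $2 }'
}

# Hypothetical helper: print the chdev command a disk would need, or nothing
# when the disk is already set to no_reserve.
suggest_fix() {
    disk=$1
    value=$2
    if [ "$value" != "no_reserve" ]; then
        echo "chdev -l $disk -a reserve_policy=no_reserve"
    fi
}

# Assumed usage in a root shell on each Virtual I/O Server:
#   for d in $(lsdev -Cc disk -F name); do
#       suggest_fix "$d" "$(lsattr -El "$d" -a reserve_policy | reserve_policy_value)"
#   done
```

Printing the commands first, instead of piping them straight to sh -x, leaves room to review the disk list before anything is changed.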

Network requirements

The migrating partition uses a virtual LAN (VLAN) for network access. The VLAN must be bridged to a physical network using a shared Ethernet adapter in the Virtual I/O Server partition; if the partition uses more than one VLAN, each of them must be bridged. Your LAN must be configured so that migrating partitions can continue to communicate with other necessary clients and servers after a migration is completed.

Note: VIOS V2.1.3 adds support of 10 Gb FCoE Adapters (5708, 8275) on the Linux and IBM AIX operating systems by adding N_Port ID Virtualization (NPIV).

Requirements for remote migration

The Remote Live Partition Mobility feature is available starting with HMC Version 7 Release 3.4.

This feature allows a user to migrate a client partition to a destination server that is managed by a different HMC. The function relies on Secure Shell (SSH) to communicate with the remote HMC.

The following list indicates the requirements for remote HMC migrations:

- A local HMC managing the source server

- A remote HMC managing the destination server

- Version 7 Release 3.4 or later HMC version

- Network access to a remote HMC

- SSH key authentication to the remote HMC

The source and destination servers, mover service partitions, and Virtual I/O Servers are required to be configured exactly as though they were going to be performing migrations managed by a single HMC.

To initiate the remote migration operation, you may use only the HMC that contains the mobile partition.
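A remote migration is usually validated from the HMC command line before it is started. The following is a minimal sketch, assuming SSH key authentication to the remote HMC has already been set up (typically with the HMC mkauthkeys command) and that validation uses migrlpar -o v with the remote HMC given by --ip and -u; remote_validate_cmd is a hypothetical helper that only prints the command, and all system, partition, and host names in the example are placeholders.

```shell
# Hypothetical helper: print (not run) the HMC command line for validating a
# migration to a server that is managed by a remote HMC.
# Usage: remote_validate_cmd SRC_SYS DEST_SYS LPAR REMOTE_HMC REMOTE_USER
remote_validate_cmd() {
    if [ "$#" -ne 5 ]; then
        echo "usage: remote_validate_cmd SRC_SYS DEST_SYS LPAR REMOTE_HMC REMOTE_USER" >&2
        return 2
    fi
    printf 'migrlpar -o v -m %s -t %s -p %s --ip %s -u %s\n' \
        "$1" "$2" "$3" "$4" "$5"
}

# Example with placeholder names:
#   remote_validate_cmd srcSys dstSys mobile1 hmc2.example.com hscroot
```

The printed command would be run on the local HMC, the one that manages the source server; replacing -o v with -o m would request the migration itself once validation passes.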

Active Memory Sharing configuration

An IBM Power System server based on the POWER6 or POWER7 processor

Virtual Fibre Channel (NPIV) configuration

Virtual Fibre Channel is a virtualization feature. Virtual Fibre Channel uses N_Port ID Virtualization (NPIV), and enables PowerVM logical partitions to access SAN resources using virtual Fibre Channel adapters mapped to a physical NPIV-capable adapter.

The mobile partition must meet the requirements described above. In addition, the following components must be configured in the environment:

- An NPIV-capable SAN switch

- An NPIV-capable physical Fibre Channel adapter on the source and destination Virtual I/O Servers

- Each virtual Fibre Channel adapter on the Virtual I/O Server mapped to an NPIV-capable physical Fibre Channel adapter

- Each virtual Fibre Channel adapter on the mobile partition mapped to a virtual Fibre Channel adapter in the Virtual I/O Server

- At least one LUN mapped to the mobile partition's virtual Fibre Channel adapter

- Mobile partitions may have virtual SCSI and virtual Fibre Channel LUNs. Migration of LUNs between virtual SCSI and virtual Fibre Channel is not supported at the time of publication.

Fixes that affect LPM:

Firmware Release Ex340_095:

On systems running system firmware Ex340_075 and Active Memory Sharing, a problem was fixed that might have caused a partition to lose I/O entitlement after the partition was moved from one system to another using PowerVM Mobility.

Firmware Release Ex350_063

A problem was fixed which caused software licensing issues after a live partition mobility operation in which a partition was moved to an 8203-E4A or 8204-E8A system.

Firmware Release Ex350_103

HIPER: IBM testing has uncovered a potential undetected data corruption issue when a mobility operation is performed on an AMS (Active Memory Sharing) partition. The data corruption can occur in rare instances due to a problem in IBM firmware. This issue was discovered during internal IBM testing, and has not been reported on any customer system. IBM recommends that systems running on EL340_075 or later move to EL350_103 to pick up the fix for this potential problem. (Firmware levels older than EL340_075 are not exposed to the problem.)

Firmware Release AM710_083

A problem was fixed that caused SRC BA210000 to be erroneously logged on the target system when a partition was moved (using Live Partition Mobility) from a Power7 system to a Power6 system.

A problem was fixed that caused SRC BA280000 to be erroneously logged on the target system when a partition was moved (using Live Partition Mobility) from a Power7 system to a Power6 system.

Firmware Release AM720_082

A problem was fixed that caused the system ID to change, which caused software licensing problems, when a live partition mobility operation was done where the target system was an 8203-E4A or an 8204-E8A.

A problem was fixed that caused SRC BA210000 to be erroneously logged on the target system when a partition was moved (using Live Partition Mobility) from a Power7 system to a Power6 system.

A problem was fixed that caused SRC BA280000 to be erroneously logged on the target system when a partition was moved (using Live Partition Mobility) from a Power7 system to a Power6 system.

A problem was fixed that caused a partition to hang following a partition migration operation (using Live Partition Mobility) from a system running Ax720 system firmware to a system running Ex340, or older, system firmware.

Firmware Release Ax720_090

HIPER: IBM testing has uncovered a potential undetected data corruption issue when a mobility operation is performed on an AMS (Active Memory Sharing) partition. The data corruption can occur in rare instances due to a problem in IBM firmware. This issue was discovered during internal IBM testing, and has not been reported on any customer system.

VIOS V2.1.0

Fixed problem with disk reservation changes for partition mobility

Fixed problem where Mobility fails between VIOS levels due to version mismatch

VIOS V2.1.2.10FP22

Fixed problems with NPIV LP Mobility

Fixed problem with disk reservation changes for partition mobility

Fixed problem where Mobility fails between VIOS levels due to version mismatch

VIOS V2.1.2.10FP22.1

Fixed a problem with NPIV Client; reconnect failure

VIOS V2.1.2.10FP22.1 NPIV Interim Fix

Fixed a problem with the NPIV vfc adapters

VIOS V2.1.3.10FP23 IZ77189 Interim Fix

Corrects a problem where an operation to change the preferred path of a LUN can cause an I/O operation to become stuck, which in turn causes the operation to hang.

VIOS V2.2.0.10 FP-24

Fixed problems with Live Partition Mobility

Documented limitation with shared storage pools

VIOS V2.2.0.12 FP-24 SP 02, V2.2.0.13 FP-24 SP03

Fixed a potential VIOS crash issue while running AMS or during live partition mobility

Fixed an issue with wrong client DRC name after mobility operation

Change history:

10/20/2009 - added SoD for converged network adapters.

04/15/2010 - added NPIV support for converged network adapters.

09/13/2010 - added new Power7 models

01/25/2011 - added OS source to destination requirement, updated fix information

03/28/2011 - added BA000100 error log entry on certain firmware levels and firmware fixes.

10/17/2011 - added new Power7 models and SDMC information.

01/27/2012 - corrected new location for supported firmware matrix, LPM now supported with SSPs.

06/11/2012 - corrected availability of NPIV with FCoE adapters, added Flex Power nodes.

env_chk.sh